Agentic Image Prompting with Midjourney, Flux and other tools

Getting rid of that AI look

Inspired by the signature, ‘branded’ look of series like Severance or Breaking Bad, I started an experiment.

First, I tried ChatGPT:

“confirm you understand how to 1. use text to image prompts with midjourney and flux 2. understand how to coloring and production design works and how it's becomes signature to series like breaking bad, severance. the color usage, color palette, angles, film stock, lighting, etc”

After ChatGPT gathered some online sources, I instructed it further:

“now, without copying these tv-series, write made up scenes with remarkable movie product design and coloring, find out never before seen, unique color combinations, lighting, compostions etc that could be a base for a new series. you can go to extremes”

The result was far from convincing. It still had the same cheesy AI look.

3rd try:

“continue going even deeper, do not follow your dataset and typical movie industry cliches, but combine the most unusal stuff from all over the human knowledge”

Already better, but I wanted more. ChatGPT relies too much on the Midjourney aesthetic that is baked into these kinds of tools.

Mythic Resonance

Imagine an artifact that is not merely a product but a living apparatus for weaving destinies. This “loom” is forged from crystalline composites whose textures defy Euclidean geometry, their surfaces dynamically refracting light into impossible spectral gradients. Its panels merge the Fibonacci sequence with patterns reminiscent of Incan textiles and fractal spirals found in nature.

Design & Materials: The structure shifts in color: iridescent opal intermingles with deep quantum magenta and neural-network teal, responding to the observer’s own genetic memory.

Lighting & Composition: Ambient illumination is derived from filtered cosmic radiation, cascading over walls etched with forgotten Sumerian cuneiform and surreal motifs that recall the dreamlike landscapes of Dali and Escher combined.

Narrative Implication: Here, destiny and memory become tangible, inviting characters (and viewers) to literally weave their fate into a tapestry that spans the multiverse.

4th round:

“Not bad, but they all look AI generated. let's focus on a scene with 2 people in a room. Variate the time of day, room background, lighting, composition, angle, color grading, film stock, color palette, production design. Do not follow the typical cliches, go deep, be experimental and write 10 prompts.”
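The axes named in this prompt (time of day, room, lighting, angle, film stock, palette) can also be varied programmatically. Below is a minimal sketch, assuming you want ten distinct combinations as a starting point for hand editing. The attribute values and the `build_prompts` helper are hypothetical and were not part of the original experiment.

```python
import random

# Hypothetical attribute values, chosen only for illustration.
SCENE_AXES = {
    "time of day": ["pre-dawn", "flat noon", "late afternoon", "deep night"],
    "room": ["tiled laundry room", "half-packed office",
             "hotel corridor alcove", "greenhouse kitchen"],
    "lighting": ["single bare bulb", "overcast window light",
                 "bounced monitor glow", "sodium streetlight through blinds"],
    "angle": ["low from the floor", "over-the-shoulder",
              "top-down", "through a doorway"],
    "film stock": ["16mm reversal stock", "pushed 35mm negative",
                   "expired slide film"],
    "palette": ["ochre and slate", "mint and rust",
                "bone white and oxblood", "dusty violet and olive"],
}


def build_prompts(count, seed=0):
    """Return `count` distinct prompts, one random value per axis."""
    rng = random.Random(seed)  # seeded so a run can be reproduced
    seen = set()
    prompts = []
    while len(prompts) < count:
        choice = tuple(rng.choice(values) for values in SCENE_AXES.values())
        if choice in seen:
            continue  # skip combinations that were already used
        seen.add(choice)
        details = ", ".join(
            f"{axis}: {value}" for axis, value in zip(SCENE_AXES, choice)
        )
        prompts.append(f"Two people in a room, {details}")
    return prompts


prompts = build_prompts(10)
for prompt in prompts:
    print(prompt)
```

Random combination only covers the "variate everything" part of the request. The non-cliché choices still have to come from the value lists themselves.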

I did another three rounds, but the outputs still had a slight AI feel. You can see the whole process here:

https://chatgpt.com/share/67a9c19d-5950-8001-9ac7-5b38f2e052c4

I switched to claude.ai, hoping to get better results.

The images started to look more natural, a small step towards the goal: finding a new visual language for typical film scenes, a production design and art direction exploration without the clichés.

I asked Claude to summarize its understanding of the task:

The key is not to rely on technical tricks or excessive stylization, but to develop a coherent visual language that feels both fresh and purposeful. The most memorable visual experiences often come from finding new ways to see familiar things, rather than creating completely artificial environments.

The goal is to make artistic choices that serve the story and enhance the viewer's emotional connection, rather than just being different for the sake of being different. When done well, these techniques become invisible to the casual viewer while creating a distinctive atmosphere that defines the work.

Claude even defined a name for the methodology:

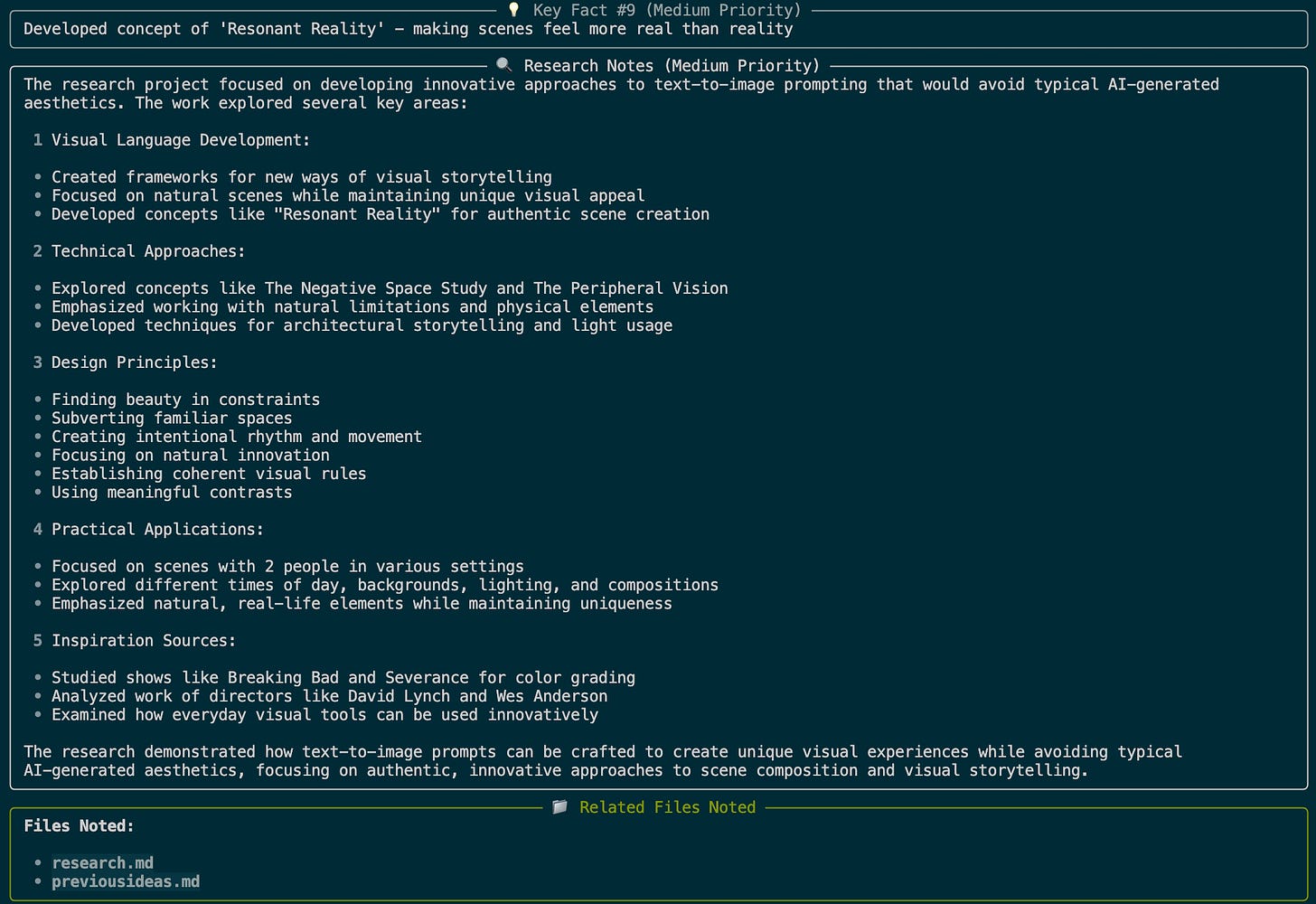

The goal is to create what might be called Resonant Reality—a world that feels more real than reality itself, not through exaggeration or stylization, but through careful attention to how we actually experience space, time, and human interaction.

I had always wanted to explore agentic research. After seeing the live demos on Friday afternoons at Hackerspace, I was sure we could achieve better results with sophisticated, near-AGI autonomous research agents working towards a defined goal. I prepared documentation for sparc (https://github.com/ruvnet/sparc), an open source Python app by Reuven Cohen.

You could also use OpenAI’s ChatGPT deep research (currently Pro only) or LionAGI by Ocean Li (https://github.com/lion-agi/lionagi).

I added everything I had learned earlier about prompting with ChatGPT and Claude. Have a look at the docs on my GitHub: https://github.com/bencium/agentic-design

Next, I ran sparc with a setting that returns quick first results. Imagine the results if I let it research for hours… See the images below, which I made from the prompts sparc generated for me in under 5 minutes. Here is a sample prompt for the agentic framework (in this case, sparc):

sparc -m "Conduct an expert-level analysis of image prompting techniques based on documentation in the current folder. Create a markdown report when you are ready." --hil

^ This is the real-time feedback in the terminal as sparc automatically creates the research document.

sparc even suggested using sound as a new storytelling device, which is particularly interesting.

The Sound Shadow: We see people only through the way they interrupt ambient sound. Their presence creates acoustic shadows in the environment's natural soundscape, revealed through subtle visual ripples in the air. Their emotional states manifest in how these disturbances interact: anger creates sharp disruptions, while intimacy results in harmonious wave patterns.

With ElevenLabs, I generated some samples right away:

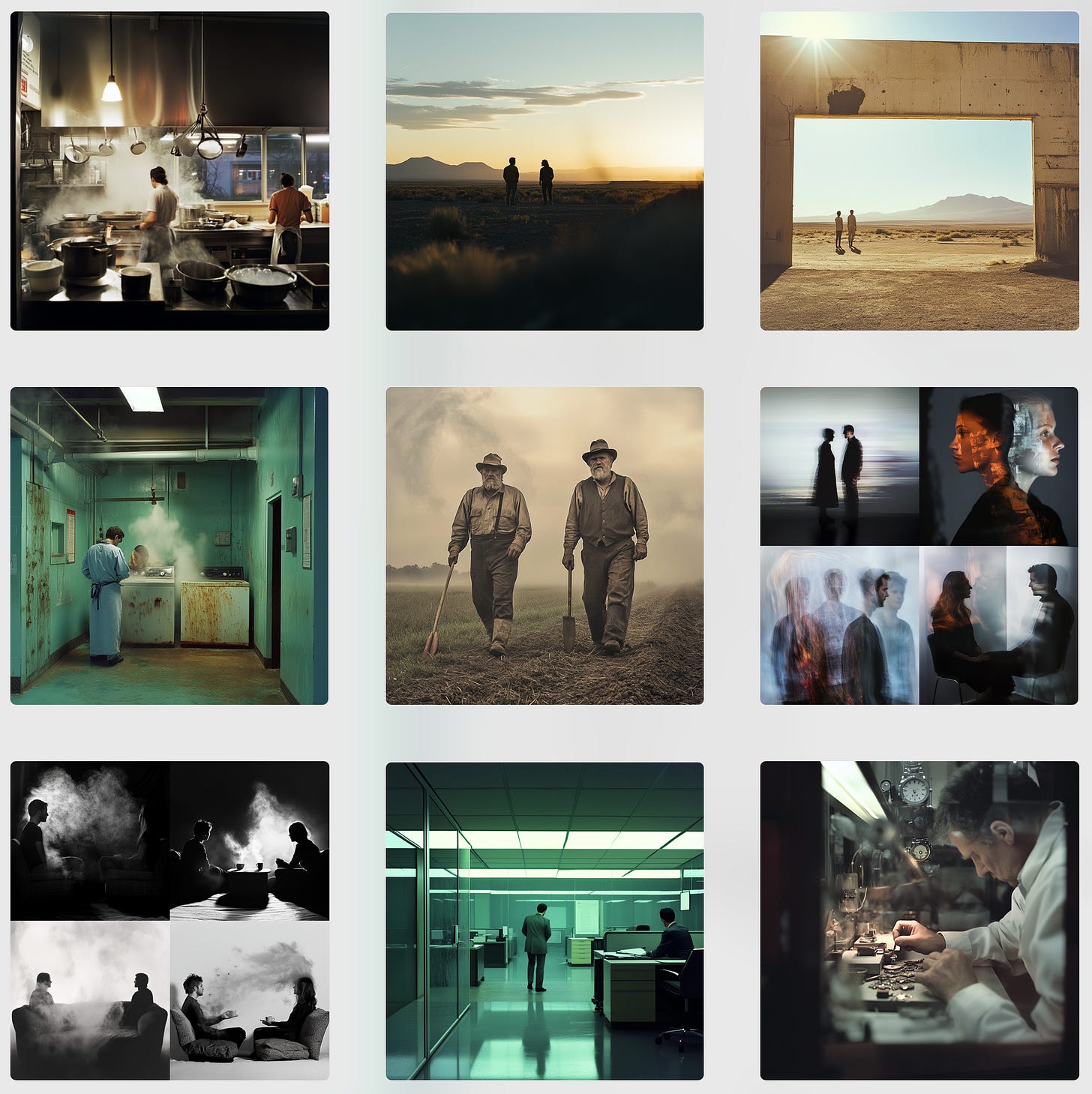

Here are a few selected images that demonstrate the power of agentic research and image prompting:

About Agentic Prompting:

Separates research, planning, and implementation into distinct phases

Maintains context and knowledge across stages

Seamlessly combines multiple AI capabilities

Maintains context across sessions

Learns from previous interactions

Builds cumulative project understanding
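The phase separation and shared context listed above can be sketched in a few lines. This is a toy illustration under my own assumptions, not how sparc or any other framework is implemented: `ProjectContext`, `research`, `plan`, `implement` and `run_pipeline` are hypothetical names, and each phase only records a note instead of calling a model.

```python
import json
from dataclasses import dataclass, field, asdict


@dataclass
class ProjectContext:
    """Hypothetical shared memory that every phase reads and extends."""
    goal: str
    notes: list = field(default_factory=list)

    def add(self, phase, text):
        self.notes.append({"phase": phase, "text": text})

    def dumps(self):
        # Serialize so the context can be reloaded in a later session.
        return json.dumps(asdict(self))

    @classmethod
    def loads(cls, raw):
        return cls(**json.loads(raw))


def research(ctx):
    ctx.add("research", f"Collected references for: {ctx.goal}")


def plan(ctx):
    findings = [n for n in ctx.notes if n["phase"] == "research"]
    ctx.add("plan", f"Outlined prompts from {len(findings)} research note(s)")


def implement(ctx):
    steps = [n for n in ctx.notes if n["phase"] == "plan"]
    ctx.add("implement", f"Wrote prompts following {len(steps)} plan note(s)")


def run_pipeline(ctx):
    # Research, planning and implementation run as distinct phases,
    # each one building on the notes left by the previous phase.
    for phase in (research, plan, implement):
        phase(ctx)
    return ctx


ctx = run_pipeline(ProjectContext(goal="two people in a room, no AI look"))
saved = ctx.dumps()                      # end of one session
restored = ProjectContext.loads(saved)   # start of the next session
print([note["phase"] for note in restored.notes])
```

Keeping the context as plain data is one simple way to carry knowledge both across stages and across sessions. A real agent would replace the note strings with model calls and tool output.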

The methodology focuses on creating authentically natural scenes while avoiding typical AI-generated aesthetics.

Rather than relying on conventional AI-art tropes, the methodology emphasizes architectural storytelling and uses light as a narrative element.

Two standout techniques have emerged from this research:

1. The Negative Space Study: This approach revolutionizes scene composition by focusing on the spaces between elements, creating subtle yet powerful visual narratives through absence rather than presence.

2. The Peripheral Vision Technique: This innovative method captures scenes from the edge of human sight, utilizing natural distortion and color desaturation to create more authentic, instinctive visual experiences.

What makes this approach great is its emphasis on finding beauty in constraints and subverting familiar spaces. Rather than fighting against limitations, the methodology embraces them as creative tools, resulting in more efficient and authentic content generation.

Let me know if you want a similar, custom agentic research system for your creative agency or film production business. For teams looking to enhance their visual development workflow, our tools offer a powerful combination of AI capabilities while maintaining human oversight and control. The automatic process grows smarter with your project.